AWS Lambda & its layered problems 🧵

This article looks at how Lambda "layers" can become a problem as a project grows, and how we can solve it by packaging the function as a container image.

AWS Lambda is a serverless compute service for running short-lived tasks (a single invocation can run for at most 15 minutes). With Lambda, we can write code in languages such as JavaScript (Node.js), Python, Java, or Ruby and run it on demand or whenever a specific event happens.
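To make the "run code when an event happens" model concrete, here is a minimal sketch of a Python handler. Lambda calls the configured handler with the event (a dict parsed from JSON) and a context object. The function name `lambda_handler` and the `name` field in the event are illustrative assumptions, not part of this article's project.

```python
import json
from typing import Any


def lambda_handler(event: dict, context: Any) -> dict:
    """Entry point that Lambda invokes with the triggering event and a context object.

    The "name" key is a hypothetical event field used only for illustration.
    """
    name = event.get("name", "world")
    # Return a JSON-serializable dict; statusCode/body is the shape
    # API Gateway proxy integrations expect.
    return {
        "statusCode": 200,
        "body": json.dumps({"message": f"Hello, {name}!"}),
    }
```

The `statusCode`/`body` shape only matters when the function sits behind API Gateway; for direct invocations, any JSON-serializable value can be returned.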

For example, say we are writing logic to load data into a warehouse such as Google's BigQuery. For this we need the google-cloud-bigquery package, which is not included in the Lambda runtime. We use Lambda "layers" to add this package to the function's environment.

We create a zip file containing the dependencies, upload it to an S3 bucket, and point the layer at that file's S3 path. The zip file can be built like this:

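```bash
mkdir my-lambda-layer && cd my-lambda-layer
mkdir -p aws-layer/python/lib/python3.11/site-packages

# List your dependencies here, e.g. google-cloud-bigquery
nano requirements.txt

# On macOS or Windows, also pass --platform manylinux2014_x86_64 --only-binary=:all:
# so compiled packages match Lambda's Linux (x86_64) runtime
pip install -r requirements.txt --target aws-layer/python/lib/python3.11/site-packages

cd aws-layer
zip -r9 lambda-layer.zip .
```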
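The zip step can also be sketched with Python's standard library, which makes the required folder layout explicit: for the Python 3.11 runtime, Lambda adds `python/` and `python/lib/python3.11/site-packages/` from each layer to the import path. This is a minimal sketch, assuming the packages were already installed into a local folder with `pip install --target`; `build_layer_zip` and `SITE_PACKAGES` are hypothetical names.

```python
import zipfile
from pathlib import Path

# Layer contents must sit under this prefix so the Python 3.11 runtime can import them.
SITE_PACKAGES = "python/lib/python3.11/site-packages"


def build_layer_zip(packages_dir: Path, zip_path: Path) -> list[str]:
    """Zip every file in packages_dir under SITE_PACKAGES and return the archive names."""
    names = []
    # compresslevel=9 mirrors the -9 flag of `zip -r9`
    with zipfile.ZipFile(zip_path, "w", compression=zipfile.ZIP_DEFLATED, compresslevel=9) as zf:
        for file in sorted(packages_dir.rglob("*")):
            if file.is_file():  # directories are implied by the file paths
                arcname = f"{SITE_PACKAGES}/{file.relative_to(packages_dir).as_posix()}"
                zf.write(file, arcname)
                names.append(arcname)
    return names
```

For example, `build_layer_zip(Path("deps"), Path("lambda-layer.zip"))` produces an archive with the same `python/lib/python3.11/site-packages/` layout as the `zip -r9` command.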

This works. Because requirements.txt lists only one package (plus its dependencies), the zip file stays small and the layer functions correctly.

A Lambda function can use up to five layers, so we can split dependencies across several small zip files and add more packages. But…

THE PROBLEM

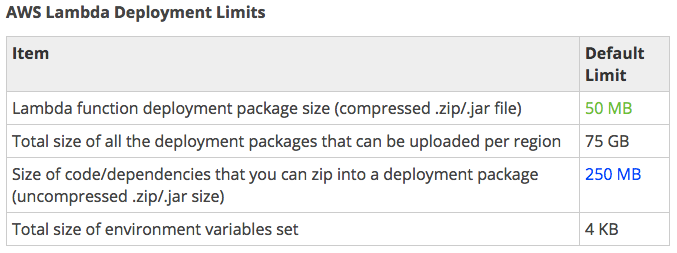

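Lambda has deployment package size limits! A .zip deployment package can be at most 50 MB zipped when uploaded directly, and the function code plus all of its layers must fit within 250 MB unzipped.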

As a project grows, or when some functions need machine-learning libraries and models, the dependencies can easily exceed this limit. Splitting them across more layers does not help, because the limit covers the function and all of its layers together. Fortunately, there is a solution.
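We can estimate locally whether a function will hit the limit before deploying it. This is a minimal sketch, assuming the unpacked function code and each layer's unpacked contents sit in local folders; the constants reflect Lambda's documented quotas of 250 MB unzipped (function plus all layers) and five layers per function, while `dir_size` and `check_lambda_size` are hypothetical helpers.

```python
from pathlib import Path

# Documented Lambda quotas for .zip-based functions (verify against current AWS docs)
MAX_UNZIPPED_BYTES = 250 * 1024 * 1024  # function code plus all layers, unzipped
MAX_LAYERS = 5  # layers per function


def dir_size(path: Path) -> int:
    """Total size in bytes of all regular files under path."""
    return sum(f.stat().st_size for f in path.rglob("*") if f.is_file())


def check_lambda_size(function_dir: Path, layer_dirs: list[Path]) -> tuple[int, list[str]]:
    """Return the total unzipped size and a list of quota problems (empty if it fits).

    function_dir and layer_dirs are hypothetical local folders holding the
    unpacked function code and the unpacked contents of each layer.
    """
    problems = []
    if len(layer_dirs) > MAX_LAYERS:
        problems.append(f"{len(layer_dirs)} layers attached; at most {MAX_LAYERS} allowed")
    total = dir_size(function_dir) + sum(dir_size(d) for d in layer_dirs)
    if total >= MAX_UNZIPPED_BYTES:
        problems.append(f"{total / 1024**2:.1f} MB unzipped; must stay under 250 MB")
    return total, problems
```

An empty problem list means the function still fits; once the total approaches 250 MB, the container-image approach described next becomes the better option.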

THE SOLUTION

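We can solve this by packaging the Lambda function as a Docker container image and deploying it with the AWS CDK ✨ Container images can be up to 10 GB, far more than the 250 MB allowed for a .zip package and its layers.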

This solution has a few prerequisites:

First, install and configure the AWS CLI. The Amazon docs cover this: AWS CLI installation and configuration

Next, install and configure Docker, and keep the Docker daemon running throughout this process. Here's a link for this: Docker installation and configuration

Finally, install and configure the AWS CDK (Cloud Development Kit): CDK installation and configuration

Once the CDK is set up, create the project:

```bash
mkdir cdk_docker_lambda && cd cdk_docker_lambda
cdk init --language python
source .venv/bin/activate
pip install -r requirements.txt && pip install -r requirements-dev.txt

# Needed once per AWS account and region before the first deployment
cdk bootstrap
cdk deploy
```

This should be our project structure,

After writing the Lambda function code, the Dockerfile, and the CDK stack (see the Lambda with Docker Git repository for reference), we can test the function locally.

Make sure the Docker daemon is running. Then open a terminal, go to the root of the CDK application, and build the image:

```bash
docker build -t youtube-sentiment-final/lambda .
```

This creates a Docker image. Next, run the image as a container:

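```bash
docker run -p 9000:8080 -e AWS_ACCESS_KEY_ID="ACCESS_KEY" -e AWS_SECRET_ACCESS_KEY="SECRET_KEY" youtube-sentiment-final/lambda:latest
```

This maps port 9000 on the host to port 8080 in the container (the AWS Lambda base images include a runtime interface emulator that listens on 8080). To test it, send a request with curl: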

```bash
curl -X POST "http://localhost:9000/2015-03-31/functions/function/invocations" -H 'Content-Type: application/json' -d '{YOUR JSON PAYLOAD}'
```
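The same local invocation can also be scripted from Python with only the standard library, which is handy for repeatable local tests. This is a minimal sketch, assuming the container is running with port 9000 mapped as in the `docker run` step; `build_invoke_request`, `invoke_local`, and any payload you pass are hypothetical.

```python
import json
import urllib.request

# Invocation endpoint exposed by the runtime interface emulator (same URL as the curl call)
LOCAL_INVOKE_URL = "http://localhost:9000/2015-03-31/functions/function/invocations"


def build_invoke_request(payload: dict, url: str = LOCAL_INVOKE_URL) -> urllib.request.Request:
    """Build a POST request that carries the event payload as JSON."""
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def invoke_local(payload: dict, timeout: float = 30.0):
    """Send the event to the running container and decode the handler's JSON response."""
    with urllib.request.urlopen(build_invoke_request(payload), timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))
```

With the container running, `invoke_local({"name": "Lambda"})` posts the event and returns whatever the handler returned, decoded from JSON.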

Once the function works locally, we can deploy it to our AWS account by running this command from the root of the project:

```bash
cdk deploy
```

The deployed Lambda function should now appear in the AWS Lambda console. Congratulations 🎉

All the code is available for reference in the GitHub repository: Lambda with Docker

Thanks for reading ✨ We hope you found these insights valuable. If you learned something new today, don't forget to share this knowledge with others who might benefit from it.

Let's connect on Twitter and LinkedIn. You can find all my other links on Linktree.

Until next time! Goodbye 👋🏻